Zauntee is a new-age rapper from Tampa.

Exploring the potential of YouTube as a learning tool, this article delves into whether it’s possible to master Bitcoin trading through the platform.

Ever since the introduction of OpenAI’s ChatGPT, followed by a string of equally buzzworthy generative tools, controversy has been rife in art circles.

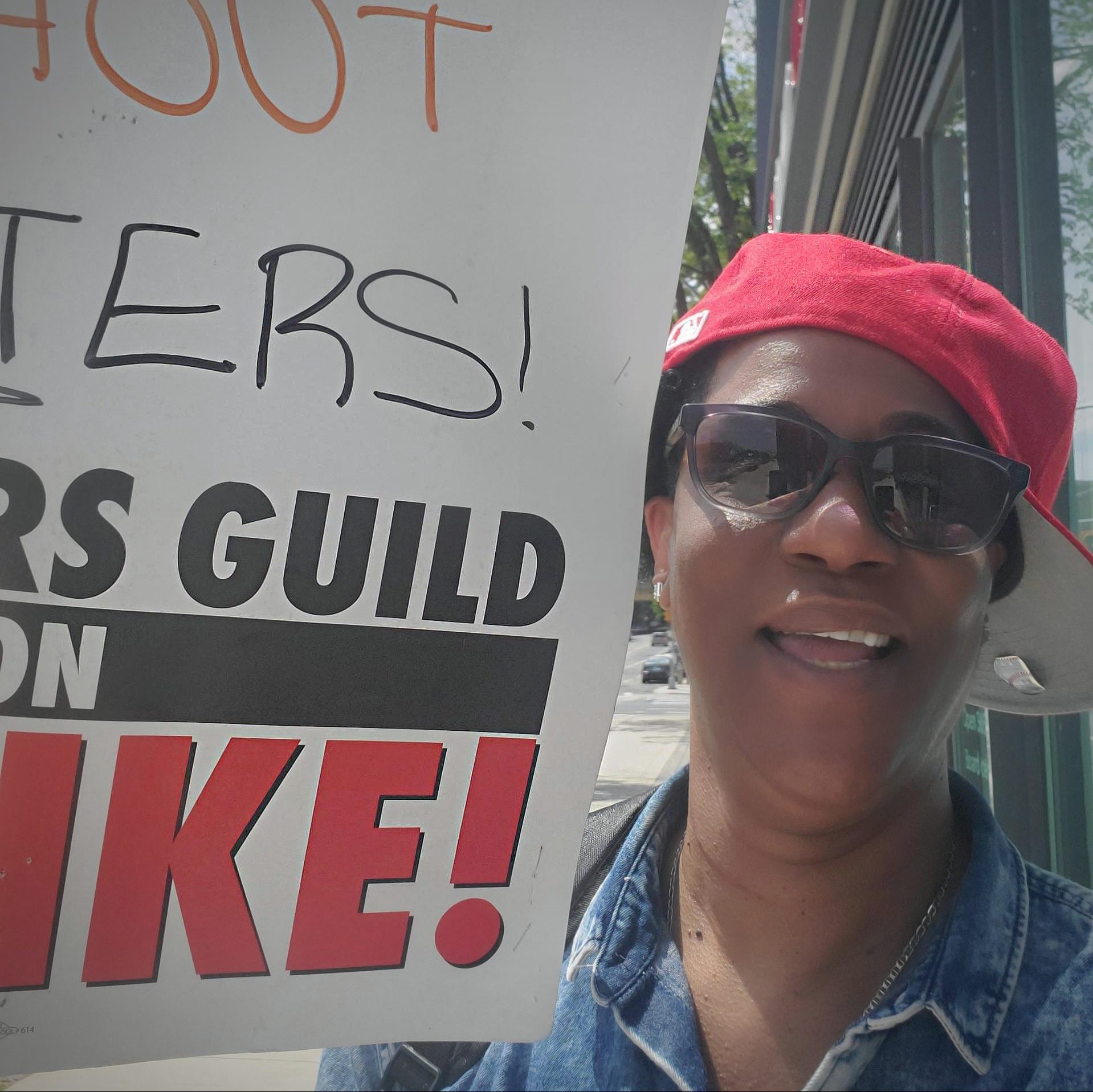

Now visible from state to state are the usually unseen writers of TV and film, out on strike.

During these tough economic times, we’ve decided to focus on practical, affordable gifts.

The future is coming, and the world is changing to keep up with it. Artificial intelligence (AI) refers to computer systems that can perform tasks normally requiring human intelligence, such as understanding language or making predictions, which makes it well suited to the working world.

The travel and hospitality sectors are in the midst of a massive technological transformation that will affect how you book travel and stay at hotels moving forward. Tech-savvy customers will come to expect the convenience that artificial intelligence brings. This vision is what I like to call ‘high-tech automation with high-touch personalization and customization.’ […]

“Lineage for a Phantom Zone marks an important milestone in Rolls-Royce’s creative history. The newly commissioned work by artist Sondra Perry sees Muse, the Rolls-Royce Art Programme’s inaugural award, the Dream Commission, come to fruition. Initiated to advance the medium of moving image art, the Dream Commission has consisted of a two-year process during which […]

Banner Public Affairs is excited to announce the launch of BannerAI, a new artificial intelligence platform that further amplifies Banner’s ability to pair the right reporters with the right story, amongst other expanded capabilities. Custom-built to meet our clients’ needs, our tool combines IBM Watson and AI natural language processing to analyze a database […]

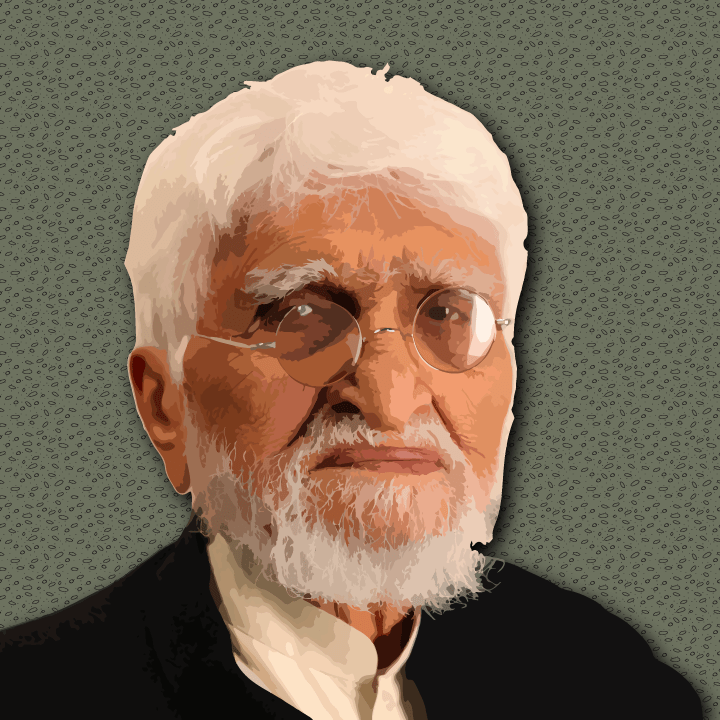

India’s Newest Museum Allows Conversation With A Dead Artist Via AI

The Museum of Art and Photography and Accenture Labs have collaborated to create India’s first conversational digital persona of the celebrated artist M F Husain. This unique digital experience was created during the COVID-19 lockdowns as a way for people to stay engaged with art. Husain’s […]